Increasing trust in analytics data by giving users super-powers

Giving users more visibility, control and the ability to troubleshoot their analytics system.

ROLE

Product Design owner

TEAM

1 PM, 2 Engineers

TIMELINE

2 sprints (~ 4 wk) • 2023

Whatfix is primarily known as a Digital Adoption Platform (DAP) provider. Its core tools, including Whatfix Studio, were originally built to enable L&D teams and product teams to create in-app guidance content for improving user onboarding and task completion. However, as Whatfix expanded into Product Analytics (PA), these same tools were adapted to serve a very different purpose: capturing and analyzing user behavior across applications.

Whatfix Product Analytics (PA) enables Product Managers (PMs) to manually define trackers called 'User Actions' that track how users interact with their applications. This is done using Whatfix Studio, which can be launched through a browser extension and appears on top of the user's application via an iFrame. PMs select UI elements, define a trigger (like click or hover), and save the User Action. Once saved, they can view usage metrics for these actions in the Whatfix web app.

Initial state - User Action creation workflow

There are two primary user personas within the general umbrella of PMs:

Focus on employee-facing apps (e.g., Salesforce, Workday, SAP Suite). They do not have access to the app code and rely on Whatfix's no-code platform to define and track User Actions.

Work on shipping customer-facing products and have access to their application's codebase. They tend to be more technically proficient and comfortable troubleshooting issues on their own.

User trust in Whatfix PA data was low, and this key feedback surfaced across multiple channels—ProductBoard tickets, NPS responses, and customer conversations:

"These numbers don't make any sense"

"I forget where a User Action was created. It's painful to keep switching tabs just to confirm."

"We've had data issues before. Now I don't trust the numbers."

The Studio experience offered no visibility into what had been created unless the user manually went back to the Whatfix web app, located the User Action, clicked edit, and relaunched Studio in a new tab just to view a screenshot of the target element. Validating functionality was even harder—users had to simulate the end-user experience and then wait for analytics to reflect the interaction.

Manually testing User Actions by simulating the end-user

Our mission was to increase user trust in Product Analytics data. Of course, even technically savvy PMs cannot fix or analyze every application-level technical issue on their own. But they also don't want to be forced to contact support or a Customer Success Executive for minor issues without first having a chance to investigate themselves.

We want to help users feel more confident using Product Analytics by addressing two core issues:

One proposed idea aimed to further build trust in data quality by allowing users to hover over highlighted elements (those with associated User Actions) and view real-time tooltips showing:

This would have provided instant access to actionable context, directly overlaid on the application interface. However, we had to drop this idea due to technical limitations:

We've documented this idea for future exploration once performance optimizations are available, as it received internal excitement during early brainstorming.

We started to solve the problem of visibility by highlighting User Actions on the screen. This helps users identify and remember where they have already created User Actions so that they don't create duplicates (which happened often).

Fun fact:

We actually piggybacked on an emergent solution here. When customers raised this issue, our post-sales team often ran a console command that temporarily triggered this highlighted state, which they used for debugging. Over time, customers saw it too and asked how they could use it themselves. A potential solution had been tested and validated with customers completely inadvertently!

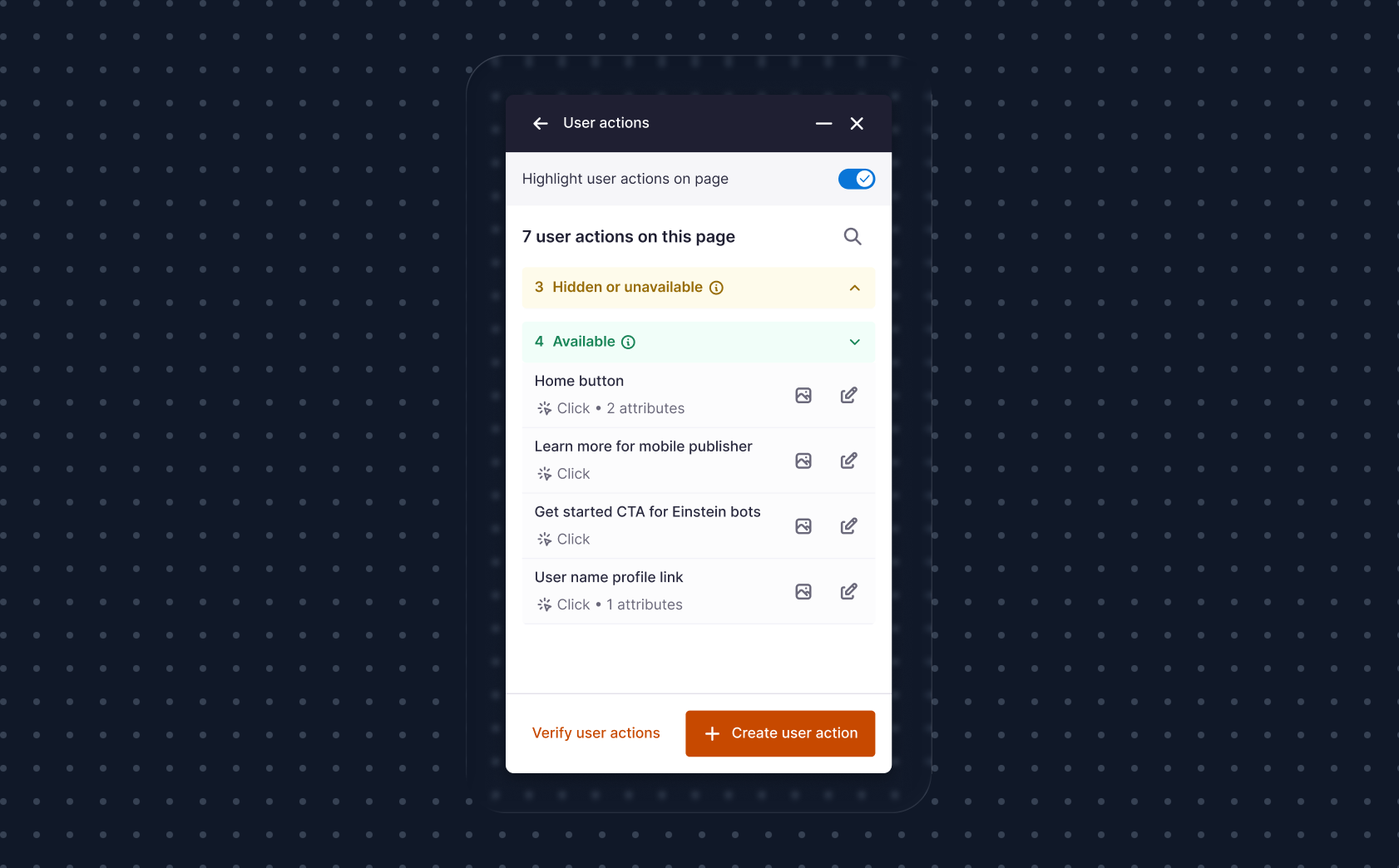

We wanted to list all the User Actions on the webpage that the user is currently on, so that they could view, locate and edit those User Actions within the context of the application they use. The first hurdle we encountered was that we couldn't always locate every User Action that had been created on a given page. Whatfix's ScreenSense algorithm analyzes the HTML DOM of the page to find the elements, but elements that aren't rendered or aren't visible might not get detected. So we had to group them into two categories:

By default, the 'hidden or unavailable' section was collapsed and the 'available' section was expanded. Why? If the first accordion were expanded by default, it could push the second one below the first fold, where the user might not see it.
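As a rough illustration of the grouping described above, here is a minimal TypeScript sketch. It is not Whatfix's implementation: the `UserAction` fields, the `ElementState` values, and the `locate` callback (a stand-in for the ScreenSense lookup) are all assumptions made for this example.

```typescript
// Hypothetical shape of a saved User Action; field names are assumptions.
interface UserAction {
  id: string;
  name: string;
  selector: string;
}

// Assumed outcome of looking up an element on the current page.
type ElementState = "visible" | "hidden" | "missing";

interface GroupedUserActions {
  available: UserAction[];
  hiddenOrUnavailable: UserAction[];
}

// Splits User Actions into the two sections shown in the list.
// `locate` stands in for whatever detection step Studio really uses.
function groupUserActions(
  actions: UserAction[],
  locate: (selector: string) => ElementState,
): GroupedUserActions {
  const grouped: GroupedUserActions = { available: [], hiddenOrUnavailable: [] };
  for (const action of actions) {
    if (locate(action.selector) === "visible") {
      grouped.available.push(action);
    } else {
      // Both hidden and undetected elements land in the collapsed section.
      grouped.hiddenOrUnavailable.push(action);
    }
  }
  return grouped;
}
```

Under these assumptions, calling `groupUserActions` again after the page changes (for example, once a dropdown is opened) would move a previously hidden User Action into the `available` group.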

It was not enough to just tell users that we couldn't locate some of the User Actions. Recall that during User Action creation, a screenshot is captured with a red box highlighting the location of the element. If the user selected an element that is normally hidden (inside an accordion, within a dropdown, shown only on hover, etc.), the screenshot also shows the exact state in which the element was visible (and available!).

So we added the capability for users to view the screenshot of any User Action within Studio post-creation as well, so that they could utilise it to find that elusive element. Once it's visible on the screen, Studio will instantly update and show the User Action under the 'available' accordion.

The end-to-end workflow of finding a User Action that is hidden

The key feedback about data quality was that, for users, even one anomaly or issue called into question the integrity of the entire system and left them unsure whether a User Action was working as intended.

Verifying if User Actions are working as intended

Whatfix Studio's Preview Mode needed to be activated to detect and list User Actions in real time, and it needed a full page refresh to take effect. This was a disruptive experience we had to design around.

We had to figure out a way to convince users to refresh their page (and Studio along with it) so that they could access a new experience they didn't yet know about, let alone how it would help them. This limitation could severely affect the top of the funnel for this feature, as users might simply drop off without going any further.

Iterations on how we can tell users that they should refresh to 'unlock' this improved experience

This is what the final solution looked like. We tried to 'show, not tell', and it piqued users' interest enough that they adopted the feature much more easily than we expected.

The selected solution - also shows how much extra time it takes for Studio to load

We tested our prototypes with:

"This helps me troubleshoot issues and test my User Actions very easily" - PM at a B2B SaaS company

"It's really useful to highlight the existing User Actions on the screen" - Product owner at a large enterprise

The feedback was overwhelmingly positive, which gave us confidence in our design decisions and helped us justify them to stakeholders. Users appreciated the clarity, and being able to self-diagnose issues boosted their confidence in the analytics system.

Post-launch,

👑 Our team won an internal award called 'The Self-Serviceability Maestros' for delivering this feature.

Despite initial stakeholder skepticism, we proceeded with the release based on our validation and completed development work. The feature's success directly influenced broader product strategy, inspiring 'Whatfix Diagnostics'—a similar capability for DAP content that launched over a year later and was very well-received by DAP customers.